bull, cluster, comlink, pm2, threads, and web-worker all help JavaScript applications handle concurrency, parallelism, or process isolation, but they solve very different problems in distinct environments. bull is a Redis-backed queue system for deferring and distributing work in Node.js. cluster and pm2 manage multiple Node.js processes to utilize multi-core systems: cluster is a built-in Node module, while pm2 is a production process manager with clustering, monitoring, and restart capabilities. comlink, threads, and web-worker enable off-main-thread execution: web-worker provides a minimal wrapper to create Web Workers in browsers or Node (via worker_threads), threads offers a higher-level API for threading in Node.js on top of worker_threads, and comlink simplifies communication between main threads and workers by abstracting postMessage into async/await-friendly proxies. Together, these packages span backend job processing, process orchestration, and worker-based parallelism in the browser and Node.js.

Managing Concurrency and Parallelism in JavaScript: bull vs cluster vs comlink vs pm2 vs threads vs web-worker

JavaScript is single-threaded by design, but real-world applications often need to do more than one thing at a time—whether it’s handling thousands of requests, processing large datasets, or keeping the UI smooth during heavy computation. The packages bull, cluster, comlink, pm2, threads, and web-worker all address this challenge, but they operate in different layers (backend vs frontend), different environments (Node.js vs browser), and solve different problems (queuing vs process management vs worker communication). Let’s break down how and when to use each.

🧩 Core Problem Domains: What Each Package Actually Solves

Before comparing APIs, it’s crucial to understand what problem each tool is designed for:

- bull: A job queue system. It defers work to be processed later, possibly by other processes or machines, using Redis as a durable message store.
- cluster: A process forking utility. It lets a single Node.js app spawn multiple OS processes to handle incoming network traffic across CPU cores.
- pm2: A production process manager. It runs, monitors, clusters, and restarts Node.js apps automatically, with CLI and programmatic APIs.
- web-worker: A cross-environment worker factory. It creates Web Workers in browsers and worker_threads in Node.js using the same API.
- threads: A high-level threading library for Node.js. It wraps worker_threads with promises, shared memory support, and easy data transfer.
- comlink: A communication abstraction for workers. It turns postMessage into async/await calls, making workers feel like local modules.

These aren’t interchangeable—they belong to different categories. Confusing them leads to architectural mistakes (e.g., trying to use bull for UI responsiveness).

🖥️ Environment Compatibility: Where Each Package Runs

| Package | Browser | Node.js | Requires Redis? |
|---|---|---|---|
| bull | ❌ | ✅ | ✅ |
| cluster | ❌ | ✅ (core) | ❌ |
| pm2 | ❌ | ✅ | ❌ |
| web-worker | ✅ | ✅* | ❌ |
| threads | ❌ | ✅ | ❌ |
| comlink | ✅ | ✅* | ❌ |

* In Node.js, web-worker is implemented on top of the built-in worker_threads module. comlink does not create workers itself: in Node.js, pair it with web-worker or wrap a worker_threads Worker using comlink's Node endpoint adapter.

💡 Key insight: If you’re building a frontend app, your options are limited to web-worker and comlink. If you’re in Node.js, all six are technically available, but only some make sense for your use case.
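The browser-versus-Node split in the table can also be checked at runtime. The following is a minimal sketch, assuming a hypothetical detectRuntime() helper that is not part of any of the six packages:

```javascript
// Hypothetical helper: report which worker mechanism the current runtime offers.
function detectRuntime(scope = globalThis) {
  // Node.js exposes process.versions.node; browsers do not.
  const isNode =
    typeof scope.process !== 'undefined' &&
    scope.process.versions != null &&
    typeof scope.process.versions.node === 'string';
  if (isNode) return 'node'; // worker_threads, cluster, bull and pm2 are usable

  // Browsers expose a global Worker constructor.
  if (typeof scope.Worker === 'function') return 'browser';

  return 'unknown';
}

console.log(detectRuntime());
```

Passing the scope as a parameter keeps the check easy to exercise with fake globals; other runtimes that emulate Node's process object would also report 'node' here.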

⚙️ Basic Usage: Spawning Work or Workers

Let’s see how each package starts its core unit of work.

bull: Enqueue a Job

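    // producer.js
    import Queue from 'bull';

    const emailQueue = new Queue('emails', 'redis://127.0.0.1:6379');

    // Add a job to the queue
    await emailQueue.add({ to: 'user@example.com', subject: 'Welcome!' });

    // processor.js (usually run as a separate process)
    import Queue from 'bull';
    import { sendEmail } from './mailer.js'; // hypothetical helper that does the SMTP work

    // Connect to the same queue name and Redis instance as the producer
    const emailQueue = new Queue('emails', 'redis://127.0.0.1:6379');

    emailQueue.process(async (job) => {
      const { to, subject } = job.data;
      await sendEmail(to, subject); // actual work happens here
    });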

cluster: Fork Child Processes

    // server.js
    import cluster from 'node:cluster';
    import http from 'node:http';
    import { availableParallelism } from 'node:os';

    if (cluster.isPrimary) {
      const numCPUs = availableParallelism();
      for (let i = 0; i < numCPUs; i++) {
        cluster.fork(); // spawns a new worker process
      }
    } else {
      // Worker process: start HTTP server
      http.createServer((req, res) => {
        res.writeHead(200);
        res.end('Hello from worker!');
      }).listen(8000);
    }

pm2: Start and Cluster an App

    // app.js
    import http from 'node:http';

    http.createServer((req, res) => {
      res.writeHead(200);
      res.end('Hello from PM2!');
    }).listen(8000);

Then run via CLI:

    # Start with 4 clustered instances
    pm2 start app.js -i 4

Or programmatically:

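    // launcher.js
    import pm2 from 'pm2';

    pm2.connect((err) => {
      if (err) throw err;

      pm2.start({
        script: 'app.js',
        instances: 4,
        exec_mode: 'cluster'
      }, (err, apps) => {
        pm2.disconnect(); // release the connection to the PM2 daemon
        if (err) throw err; // surface start failures instead of ignoring them
      });
    });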
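The same settings can also live in a PM2 ecosystem file instead of CLI flags or a launcher script. A minimal sketch, assuming the file is named ecosystem.config.js and using hello-api as a placeholder app name:

```javascript
// ecosystem.config.js
module.exports = {
  apps: [
    {
      name: 'hello-api',    // placeholder app name
      script: 'app.js',
      instances: 4,         // same as `-i 4` on the CLI
      exec_mode: 'cluster'  // run the instances in PM2's cluster mode
    }
  ]
};
```

It would then be started with pm2 start ecosystem.config.js. Keeping the process definition in a file makes it easier to review and version alongside the app.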

web-worker: Create a Worker

    // main.js
    import Worker from 'web-worker'; // web-worker has a default export

    const worker = new Worker(new URL('./worker.js', import.meta.url));

    worker.postMessage('Hello');
    worker.onmessage = (e) => console.log(e.data); // 'World'

    // worker.js
    self.onmessage = (e) => {
      self.postMessage('World');
    };

threads: Spawn a Thread

    // main.js
    import { spawn, Thread, Worker } from 'threads';

    const hasher = await spawn(new Worker('./hasher'));
    const hash = await hasher.sha256('secret');
    await Thread.terminate(hasher);

    // hasher.js
    import { createHash } from 'node:crypto';
    import { expose } from 'threads/worker';

    expose({
      sha256(input) {
        // Hash the input with Node's built-in crypto module
        return createHash('sha256').update(input).digest('hex');
      }
    });

comlink: Proxy a Worker

    // main.js
    import * as Comlink from 'comlink';

    const worker = new Worker(new URL('./api.js', import.meta.url));
    const api = Comlink.wrap(worker);

    const result = await api.expensiveCalculation(42); // feels local!

    // api.js
    import * as Comlink from 'comlink';

    const obj = {
      async expensiveCalculation(n) {
        // heavy CPU work...
        return n * n;
      }
    };

    Comlink.expose(obj);

🔁 Communication Patterns: How Data Moves

How you pass data between units of work varies dramatically:

- bull: Jobs carry JSON-serializable data. Workers pull jobs from Redis.
- cluster: Workers share no memory. Use IPC (process.send()) sparingly, or external stores (Redis, DB) for coordination.
- pm2: Similar to cluster; relies on external coordination or PM2’s process messaging (pm2.sendDataToProcessId()).
- web-worker: Raw postMessage with structured cloning (no shared memory by default).
- threads: Supports structured cloning and SharedArrayBuffer for zero-copy data sharing.
- comlink: Abstracts postMessage into async method calls, with no manual event handling.
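To make the comlink entry concrete, the sketch below shows the general idea behind such proxies: each method call becomes a message with an id, and the reply resolves a pending promise. This is not comlink's implementation. The wrap() and expose() helpers are hypothetical, and a MessageChannel (global in browsers and in Node.js 15+) stands in for a real worker.

```javascript
// Hypothetical mini-RPC: NOT comlink's code, just the idea behind it.

// "Worker" side: answer { id, method, args } messages by calling the target object.
function expose(target, port) {
  port.onmessage = async (event) => {
    const { id, method, args } = event.data;
    try {
      const value = await target[method](...args);
      port.postMessage({ id, value });
    } catch (err) {
      port.postMessage({ id, error: String(err) });
    }
  };
}

// "Main" side: a Proxy that turns property access into async method calls.
function wrap(port) {
  const pending = new Map(); // id -> { resolve, reject }
  let nextId = 0;
  port.onmessage = (event) => {
    const { id, value, error } = event.data;
    const call = pending.get(id);
    pending.delete(id);
    if (error !== undefined) call.reject(new Error(error));
    else call.resolve(value);
  };
  return new Proxy({}, {
    get: (_target, method) => (...args) =>
      new Promise((resolve, reject) => {
        const id = nextId++;
        pending.set(id, { resolve, reject });
        port.postMessage({ id, method, args });
      })
  });
}

// Demo: both ends live in one thread here; with a real worker, port2 would be
// replaced by the worker's own message endpoint.
async function demo() {
  const { port1, port2 } = new MessageChannel();
  expose({ square: (n) => n * n }, port2);
  const api = wrap(port1);
  const result = await api.square(7);
  port1.close(); // closing one end closes the channel and lets the process exit
  port2.close();
  return result;
}

demo().then((result) => console.log(result)); // 49
```

Arguments and return values still cross the channel through postMessage, so they are structured-cloned exactly as in the raw API; only the call syntax changes.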

Example: Passing Large Data

With threads, you can share memory:

    // main.js
    import { Pool, spawn, Worker } from 'threads';

    const pool = Pool(() => spawn(new Worker('./processor')));

    const buffer = new SharedArrayBuffer(1024);
    const view = new Uint8Array(buffer);
    // ... fill buffer through view ...

    // A SharedArrayBuffer is shared with the worker, not copied or transferred
    const result = await pool.queue((processor) => processor.process(buffer));
    await pool.terminate();

With comlink, arguments are structured-cloned (copied) by default. To move an ArrayBuffer to the worker instead of copying it, mark it with Comlink.transfer():

    // main.js
    import * as Comlink from 'comlink';

    const api = Comlink.wrap(new Worker(new URL('./api.js', import.meta.url)));

    const data = new Uint8Array(1024 * 1024);
    // ... fill data ...

    // Transfer ownership of the underlying ArrayBuffer to the worker
    const result = await api.process(Comlink.transfer(data, [data.buffer]));
    // data.buffer is now detached on this side

web-worker gives you no help—you must manage Transferable objects manually.
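The three ways of moving a buffer (copy, transfer, share) can be observed without any worker at all. The sketch below is an illustration that assumes a runtime with the global structuredClone() (Node.js 17+ or a modern browser), which applies the same structured clone algorithm that postMessage uses. In browsers, SharedArrayBuffer additionally requires a cross-origin isolated page.

```javascript
// 1. Copy: structured clone duplicates the bytes; the original stays usable.
const original = new Uint8Array([1, 2, 3]).buffer;
const copy = structuredClone(original);
new Uint8Array(copy)[0] = 99;
const originalFirstByte = new Uint8Array(original)[0]; // still 1

// 2. Transfer: ownership moves; the source buffer is detached (length 0).
const source = new ArrayBuffer(8);
const moved = structuredClone(source, { transfer: [source] });
const sourceLengthAfterTransfer = source.byteLength; // 0
const movedLength = moved.byteLength; // 8

// 3. Share: two views over one SharedArrayBuffer see the same memory.
const shared = new SharedArrayBuffer(4);
const writer = new Int32Array(shared);
const reader = new Int32Array(shared);
Atomics.store(writer, 0, 42);
const sharedValue = Atomics.load(reader, 0); // 42

console.log({ originalFirstByte, sourceLengthAfterTransfer, movedLength, sharedValue });
```

With a raw worker, the transfer case corresponds to worker.postMessage(data, [data.buffer]), and the share case to posting the SharedArrayBuffer itself.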

🛠️ Operational Concerns: Monitoring, Reliability, and Lifecycle

- bull: Provides job states (waiting, active, completed, failed), retries, backoff strategies, and pause/resume. Monitor via Bull Board or custom UIs.
- cluster: No built-in monitoring. If a worker dies, you must detect and respawn it yourself.
- pm2: Built-in uptime tracking, log streaming, health checks, auto-restart on crash, and graceful reloads (pm2 reload).
- web-worker / threads / comlink: No lifecycle management beyond .terminate(). You handle errors, restarts, and resource cleanup.
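As an illustration of the retry and backoff behaviour listed for bull, here is a generic exponential backoff schedule. It is a sketch of the concept with a hypothetical backoffDelay() helper and is not taken from bull's source; bull's own formula may differ.

```javascript
// Hypothetical helper: delay before retry number `attempt` (1-based),
// doubling each time and capped at `maxMs`.
function backoffDelay(attempt, baseMs = 1000, maxMs = 60000) {
  const delay = baseMs * 2 ** (attempt - 1);
  return Math.min(delay, maxMs);
}

// Delays for the first five retries of a failing job.
const schedule = [1, 2, 3, 4, 5].map((attempt) => backoffDelay(attempt));
console.log(schedule); // [ 1000, 2000, 4000, 8000, 16000 ]
```

In bull itself, retries are requested per job through options such as attempts and backoff when calling add(); the cap used here is an assumption of this sketch rather than a bull setting.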

For production Node.js services, pm2 is often preferred over raw cluster because it solves operational headaches out of the box.
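Part of what a process manager adds over raw cluster is a restart policy. The sketch below shows one such policy, a crash-loop guard, as a plain function. The names createRestartGuard, maxRestarts and windowMs are hypothetical, and this is not how pm2 implements it; with raw cluster, the guard would be consulted inside a cluster.on('exit', ...) handler before calling cluster.fork().

```javascript
// Hypothetical crash-loop guard: allow at most `maxRestarts` respawns
// within any sliding window of `windowMs` milliseconds.
function createRestartGuard({ maxRestarts = 3, windowMs = 10000 } = {}) {
  let recent = []; // timestamps of recent restarts
  return function shouldRestart(now = Date.now()) {
    // Forget restarts that fell out of the window.
    recent = recent.filter((t) => now - t < windowMs);
    if (recent.length >= maxRestarts) return false; // crashing too fast: give up
    recent.push(now);
    return true;
  };
}

// Simulated worker exits at the given times (in ms).
const shouldRestart = createRestartGuard({ maxRestarts: 3, windowMs: 10000 });
const decisions = [0, 1000, 2000, 3000, 20000].map((t) => shouldRestart(t));
console.log(decisions); // [ true, true, true, false, true ]
```

The fourth exit is refused because three restarts already happened in the last ten seconds; by the fifth exit the window has emptied and restarts are allowed again.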

🌐 Real-World Scenarios: Which Tool Fits?

Scenario 1: Background Email Sending in a Node.js API

You receive a user signup request and need to send a welcome email without blocking the response.

- ✅ Best choice: bull
- Why? Emails can fail and need retries. You want to decouple the web server from the SMTP client. Redis ensures durability.

Scenario 2: Scaling a Node.js HTTP Server Across 8 Cores

Your Express app is CPU-bound and you want to use all cores on a single machine.

- ✅ Best choice: pm2 (for production) or cluster (for minimal setups)
- Why? Both fork processes, but pm2 adds restarts, logs, and zero-downtime deploys, all of which are critical for production.

Scenario 3: Running Image Processing in a React App

You let users upload photos and apply filters without freezing the UI.

- ✅ Best choice: comlink + web-worker
- Why? comlink makes calling worker functions feel natural. web-worker ensures the same code works in the browser and in Node-based tests, where it runs on worker_threads.

Scenario 4: Parallel CSV Parsing in a Node.js CLI Tool

You have a 1GB CSV and want to split parsing across threads to speed it up.

- ✅ Best choice: threads
- Why? It supports SharedArrayBuffer for efficient data sharing and has a clean promise API. web-worker would require manual message handling.
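Whichever threading library is used, the first step in Scenario 4 is deciding which slice of the input each worker gets. A minimal sketch, assuming a hypothetical splitIntoChunks() helper and rows that are already available as an array (a real 1GB file would more likely be split by byte ranges aligned to line breaks):

```javascript
// Hypothetical helper: split `items` into `parts` contiguous chunks whose
// sizes differ by at most one, so each worker gets a similar share.
function splitIntoChunks(items, parts) {
  const chunks = [];
  const base = Math.floor(items.length / parts);
  const extra = items.length % parts; // the first `extra` chunks get one more item
  let start = 0;
  for (let i = 0; i < parts; i++) {
    const size = base + (i < extra ? 1 : 0);
    chunks.push(items.slice(start, start + size));
    start += size;
  }
  return chunks;
}

const rows = ['a,1', 'b,2', 'c,3', 'd,4', 'e,5', 'f,6', 'g,7'];
const chunkSizes = splitIntoChunks(rows, 3).map((chunk) => chunk.length);
console.log(chunkSizes); // [ 3, 2, 2 ]
```

Each chunk would then be handed to one worker, and the partial results merged on the main thread once all workers have finished.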

⚠️ Common Pitfalls and Misuses

- Using bull for frontend tasks: bull requires Redis and runs in Node.js, so it won’t help with browser UI jank.
- Using cluster for I/O-bound work: If your app is CPU-heavy per request (e.g., real-time analytics), cluster helps. But if it’s mostly waiting on DB calls, async/await is sufficient.
- Assuming comlink eliminates serialization cost: It hides postMessage, but data is still copied unless you use transferables.
- Running pm2 in serverless environments: Platforms like AWS Lambda manage scaling for you, so pm2 adds unnecessary overhead.

📊 Summary Table

| Package | Primary Use Case | Environment | Parallelism Type | Data Sharing | Production Ready? |
|---|---|---|---|---|---|
| bull | Job queueing | Node.js | Distributed (Redis) | JSON jobs | ✅ |
| cluster | Multi-process HTTP servers | Node.js | Process-based | IPC (limited) | ⚠️ (basic) |
| pm2 | Process management & clustering | Node.js | Process-based | External only | ✅ |
| web-worker | Cross-env worker creation | Browser/Node | Thread-based | Structured clone | ✅ |
| threads | High-level threading | Node.js | Thread-based | SharedArrayBuffer | ✅ |
| comlink | Worker RPC abstraction | Browser/Node | Thread-based | Structured clone* | ✅ |

* With manual setup for transferables.

💡 Final Guidance

Ask yourself these questions:

- Am I in the browser or Node.js? → Eliminates half the options immediately.
- Do I need to defer work or just run it faster? → Queues (bull) vs parallelism (threads, cluster).
- Is this for development convenience or production resilience? → pm2 for production ops; cluster for simple cases.
- How much data am I moving? → Large buffers favor threads with shared memory; small messages work with any.
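These questions can be condensed into a small decision function. This is only a sketch of the reasoning in this section, with hypothetical option names (runtime, needsQueue, scalingHttpServer, production), and not an exhaustive selector:

```javascript
// Hypothetical helper encoding the guidance in this section.
function suggestTools({ runtime, needsQueue = false, scalingHttpServer = false, production = false }) {
  // In the browser, only the worker-based options apply.
  if (runtime === 'browser') return ['comlink', 'web-worker'];
  // Deferred, retryable work calls for a queue.
  if (needsQueue) return ['bull'];
  // Spreading an HTTP server across cores is a process-management problem.
  if (scalingHttpServer) return [production ? 'pm2' : 'cluster'];
  // Otherwise: CPU-bound work inside a single Node.js process.
  return ['threads'];
}

console.log(suggestTools({ runtime: 'browser' }));
console.log(suggestTools({ runtime: 'node', needsQueue: true }));
console.log(suggestTools({ runtime: 'node', scalingHttpServer: true, production: true }));
```

The order of the checks mirrors the order of the questions: environment first, then queuing versus parallelism, then operational needs.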

These tools aren’t competitors—they’re specialists. The right choice depends entirely on your runtime environment, workload type, and operational requirements.

- web-worker: Choose web-worker when you need a simple, cross-environment way to spawn Web Workers that works consistently in both browsers and Node.js (via worker_threads). It abstracts environment differences so you can write worker code once. Use it for lightweight offloading of CPU-intensive tasks without the overhead of higher-level abstractions, but be prepared to handle postMessage communication manually.
- pm2: Choose pm2 when you need a full-featured, production-grade process manager for Node.js applications. It handles clustering, automatic restarts, logging, monitoring, and zero-downtime reloads out of the box. Use it for deploying and maintaining long-running services where reliability, observability, and operational ease matter more than minimal footprint.
- bull: Choose bull when you need reliable, persistent background job processing in Node.js with features like retries, prioritization, rate limiting, and delayed jobs. It’s ideal for decoupling time-consuming tasks (e.g., sending emails, processing uploads) from your main request flow using Redis as a message broker. Avoid it if you don’t already use Redis or if your workload doesn’t require queuing semantics.
- comlink: Choose comlink when you’re working with Web Workers (in browsers or Node via worker_threads) and want to eliminate the boilerplate of postMessage-based communication. It lets you call worker functions as if they were local async methods, making complex worker interactions feel natural. It’s especially valuable in frontend apps that offload heavy computation (e.g., image processing, data parsing) to keep the UI responsive.
- threads: Choose threads when you’re in a Node.js environment and need true parallelism using worker_threads with a clean, promise-based API. It simplifies sharing memory via SharedArrayBuffer and transferring data between threads. Prefer it over raw worker_threads when you want ergonomic thread management without dealing with low-level message passing, but note it’s Node-only and not suitable for browser contexts.
- cluster: Choose cluster when you’re running a Node.js server on a multi-core machine and want to fork child processes to share a single port without external dependencies. It’s part of Node’s core, so it’s lightweight and integrates directly with your app. However, it lacks advanced features like zero-downtime reloads, health monitoring, or log aggregation, so use it only for basic horizontal scaling within a single host.

web-worker

Native cross-platform Web Workers. Works in published npm modules.

In Node, it's a web-compatible Worker implementation atop Node's worker_threads.

In the browser (and when bundled for the browser), it's simply an alias of Worker.

Features

Here's how this is different from worker_threads:

- makes Worker code compatible across browser and Node
- supports Module Workers ({type:'module'}) natively in Node 12.8+
- uses DOM-style events (Event.data, Event.type, etc)
- supports event handler properties (worker.onmessage=..)
- Worker() accepts a module URL, Blob URL or Data URL
- emulates browser-style WorkerGlobalScope within the worker

Usage Example

In its simplest form:

    import Worker from 'web-worker';

    const worker = new Worker('data:,postMessage("hello")');
    worker.onmessage = e => console.log(e.data); // "hello"

👉 Load the worker with new URL('./worker.js', import.meta.url) so that it is resolved relative to the current module instead of the application base URL. Without this, Worker URLs are relative to a document's URL, which in Node.js is interpreted to be process.cwd().

Support for this pattern in build tools and test frameworks is still limited. We are working on growing this.

Module Workers

Module Workers are supported in Node 12.8+ using this plugin, leveraging Node's native ES Modules support. In the browser, they can be used natively in Chrome 80+, or in all browsers via worker-plugin or rollup-plugin-off-main-thread. As with classic workers, there is no difference in usage between Node and the browser:

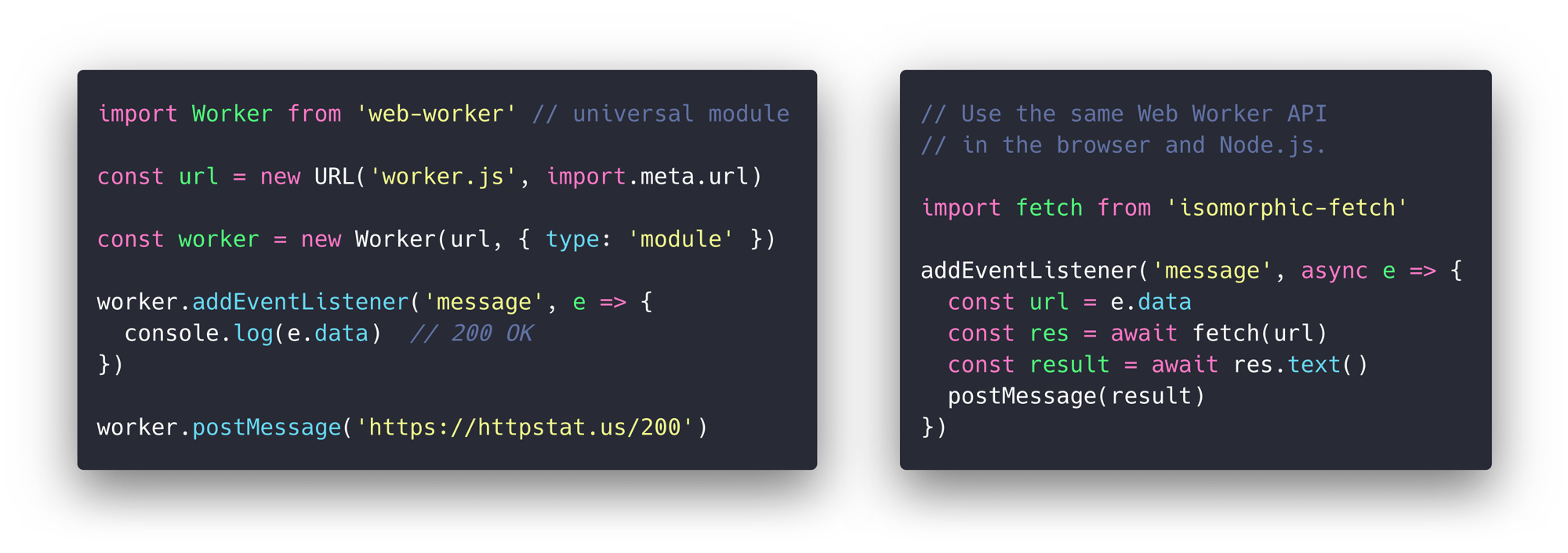

| main.mjs | worker.mjs |

|---|---|

|

|

Data URLs

Instantiating Worker using a Data URL is supported in both module and classic workers:

    import Worker from 'web-worker';

    const worker = new Worker(`data:application/javascript,postMessage(42)`);

    worker.addEventListener('message', e => {
      console.log(e.data) // 42
    });
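When the worker body is longer than a one-liner, the Data URL can be built from a source string. A small sketch, assuming a hypothetical toWorkerDataUrl() helper; encodeURIComponent keeps characters such as # and % from being misread as URL syntax, and it is an assumption here that the Worker implementation in use percent-decodes the payload:

```javascript
// Hypothetical helper: turn worker source code into a Data URL.
function toWorkerDataUrl(source) {
  return 'data:application/javascript,' + encodeURIComponent(source);
}

const url = toWorkerDataUrl('postMessage(6 * 7)');
console.log(url); // data:application/javascript,postMessage(6%20*%207)
```

The resulting string would be passed to new Worker(url) in the same way as the literal Data URL in the example.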

Special Thanks

This module aims to provide a simple and forgettable piece of infrastructure,

and as such it needed an obvious and descriptive name.

@calvinmetcalf, who you may recognize as the author of Lie and other fine modules, graciously offered up the name from his web-worker package.

Thanks Calvin!